Digital platforms in retail and banking give customers convenience through personalized, tailored technologies for shopping and performing transactions. However, that convenience comes with the danger of fraud. Transaction fraud grows every year, and organizations such as retailers and banks have recognized the potential of Artificial Intelligence models for automating fraud detection.

Besides automation, companies also employ fraud analysts, who are domain experts in fraud detection, to review suspicious transactions and protect customers. The challenge is that these professionals are knowledgeable about fraud detection but not about the inner workings of Artificial Intelligence. As a result, these experts lack trust in their AI partners. The EU has recognized this issue as well and has established a working group to define principles for developing trust between humans and AI applications.

Douglas Cirqueira, the PERFORM ESR 11, investigates how to enable a trustworthy relationship between those fraud experts and Artificial Intelligence for fighting fraud in retail and banking. In particular, his research deals with deploying Explainable AI to provide explanations to fraud experts and enhance their trust in their AI partners.

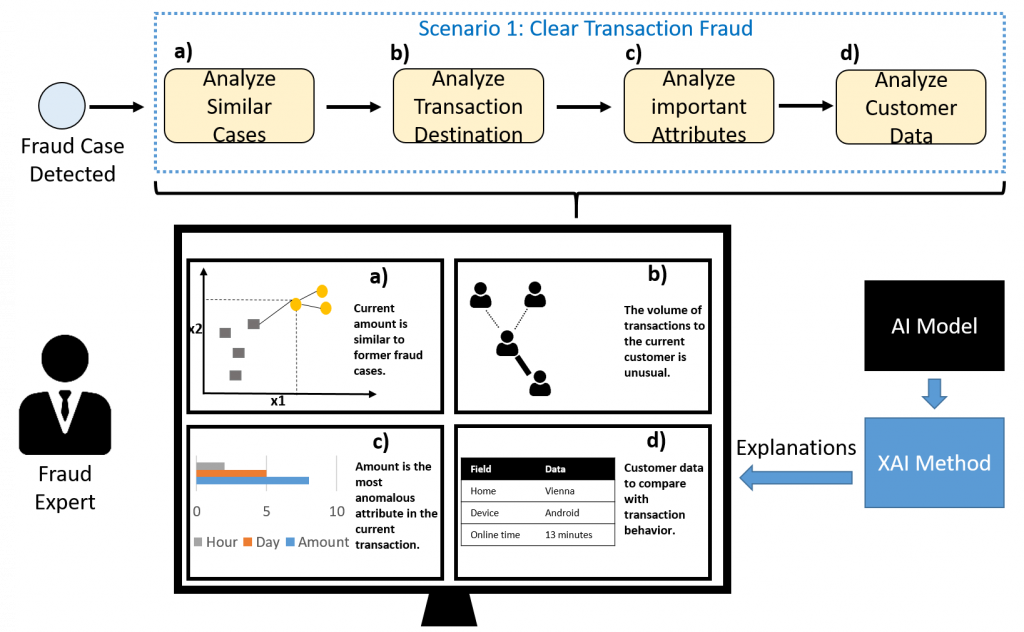

In his latest research paper, published at the International Cross-Domain Conference for Machine Learning and Knowledge Extraction (CD-MAKE 2020), Douglas investigated the feasibility of a methodology that brings a Human-Computer Interaction and Information Systems perspective to the development of Explainable AI: scenario-based design and requirements elicitation.

With this methodology, it was possible to uncover particular fraud scenarios and the decision-making context in which experts review suspicious cases. Those results have the potential to enable the deployment of explanation methods appropriate to each scenario, which can enhance the trust of professionals who deal with suspicious cases daily. Furthermore, designers and researchers in Explainable AI and HCI can better understand how to tailor and develop interfaces and explanation methods for this particular context. An interesting aspect of the scenario methodology is that it could also apply to other contexts, such as healthcare. In that case, uncovering the doctors’ context could help tailor Explainable AI to their scenarios and support their trust when assessing diagnoses predicted by AI models.

As next steps, Douglas is evaluating explanation methods and assessing the trust and performance of fraud experts who partner with Explainable AI, work that can help preserve the convenience and safety of customers in the digital retail and banking sectors.

Read the full paper here: https://link.springer.com/chapter/10.1007/978-3-030-57321-8_18

Acknowledgements: This research was supported by the European Union Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie grant agreement No. 765395; and supported, in part, by Science Foundation Ireland grant 13/RC/2094.